By keeping your video chat experience safe and enjoyable, you can build more trust and loyalty within your user base, which can increase retention and boost sales for your product.

1. Use Third-Party Authentication APIs

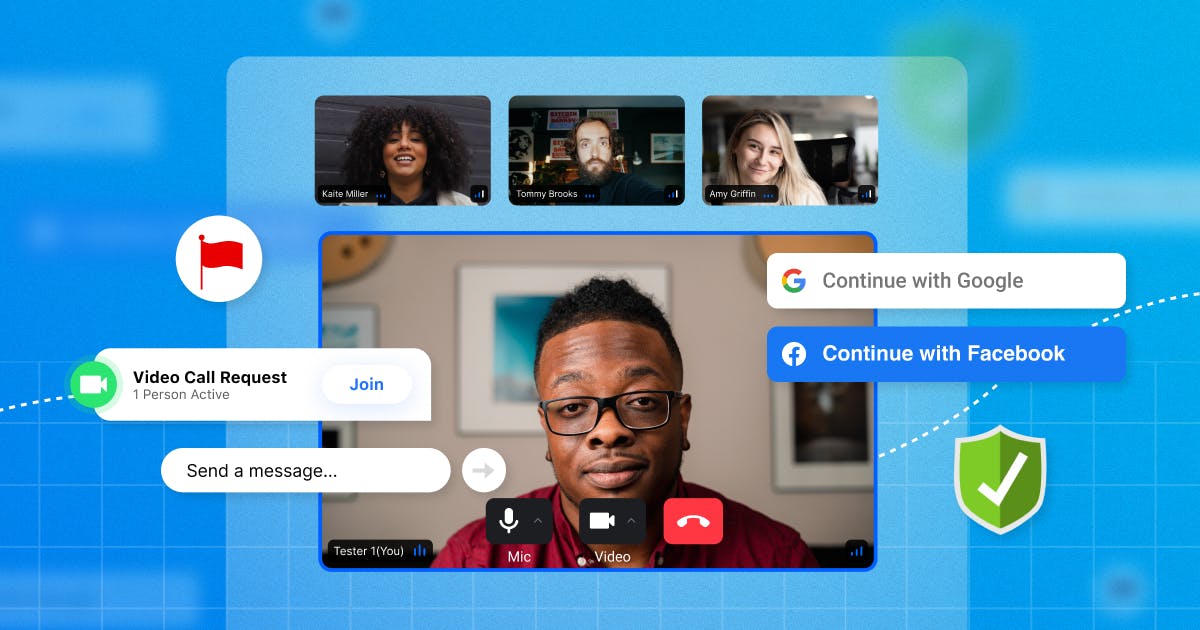

Third-party authentication can enforce secure login standards while preserving your users' experiences. Using these APIs allows users to log in through another established account like Google, Facebook, or Apple. It's also convenient for the user — they have one less login to fill out or remember and one less company to give their information to.

For you, using a third-party API for authentication means less liability if a data breach occurs. Because the provider stores and verifies users' credentials, a breach of your systems exposes less sensitive data than it would if you collected and stored passwords yourself.

You can adopt and implement third-party authentication from Google, Facebook, or another source. Once you've adopted an API, you can add buttons to the login page for users to sign in using other applications (e.g., "Sign in with Google" or "Sign in with Facebook").
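As a rough illustration of what sits behind a "Sign in with Google" button, the sketch below builds the URL the button would send users to, using Google's OAuth 2.0 authorization endpoint. The function name, client ID, and redirect URI are placeholders made up for this example, and a real integration also needs a callback handler that exchanges the returned code for tokens.

```python
import secrets
from urllib.parse import urlencode

# Google's OAuth 2.0 authorization endpoint.
GOOGLE_AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/v2/auth"


def build_google_sign_in_url(client_id: str, redirect_uri: str) -> tuple[str, str]:
    """Return (url, state) for a "Sign in with Google" button.

    Store `state` in the user's session and compare it with the value
    Google sends back to your redirect URI, to guard against CSRF.
    """
    state = secrets.token_urlsafe(16)
    params = {
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "response_type": "code",          # authorization-code flow
        "scope": "openid email profile",  # standard OpenID Connect scopes
        "state": state,
    }
    return f"{GOOGLE_AUTH_ENDPOINT}?{urlencode(params)}", state


# Hypothetical values for illustration only.
url, state = build_google_sign_in_url(
    client_id="YOUR_CLIENT_ID.apps.googleusercontent.com",
    redirect_uri="https://example.com/auth/google/callback",
)
```

Other providers follow the same overall pattern with their own endpoints and scopes, so supporting a second provider is usually a matter of adding another button and another URL builder.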

The downside to third-party authentication is that a user might not have an account with any of the providers you offer. In that case, they'll have to create one before they can sign in to your application, and that could deter them from using it. The provider handles account creation for you, but you can lower the chance of losing those users by offering several sign-in providers to choose from.

2. Enable Text Chat

Text chats help to initiate and supplement video chat conversations and give users a secondary way to communicate if any issues arise. Text chats are especially useful for moderating a large audience. When someone hosts a video for a large audience, it can be difficult to see and address everyone. With a text chat, it's easier to spot when someone needs help.

Build a text chat that is connected to the video stream, or adopt one through an API like Stream. If you opt for an API, use one that is fully customizable, so you can put the privacy and safety controls in your users' hands.

Include a function that lets users contact just the moderator or video host rather than having to send a message to the entire audience. If a user has private or sensitive information, they should be able to communicate that in a one-on-one chat with a chat administrator.
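One way to model a moderator-only message is a flag on each message that the delivery logic checks before sending it to a viewer. The sketch below is a minimal, hypothetical data model (`ChatMessage`, `can_see`, and the IDs are invented for this example), not the API of any particular chat service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChatMessage:
    sender_id: str
    text: str
    moderators_only: bool = False  # True for a private note to the host/moderators


def can_see(message: ChatMessage, viewer_id: str, moderator_ids: set[str]) -> bool:
    """Decide whether `viewer_id` should receive `message`."""
    if not message.moderators_only:
        return True  # public messages go to the whole audience
    # Private messages are visible only to the sender and the moderators.
    return viewer_id == message.sender_id or viewer_id in moderator_ids


moderators = {"host-1"}
private = ChatMessage("viewer-7", "Someone is sharing my address.", moderators_only=True)

can_see(private, "host-1", moderators)    # True: the host is a moderator
can_see(private, "viewer-9", moderators)  # False: other viewers never receive it
```

Checking visibility on the server, rather than hiding messages in the client, keeps private notes from ever reaching other viewers' devices.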

It would also be valuable to have a language-translating function, so regardless of the user's language, they can still get assistance when they need it. Use a translation API, like Google Cloud Translation or DeepL, that has high accuracy and is easily integrated into your application.

3. Enable Avatars and/or Background Blurring to Anonymize the Live-Streaming Experience

Some people prefer to remain anonymous during live streams. Avatars and background-blurring options help people preserve their anonymity, so they don't have to show their face or surroundings to strangers.

You'll need to adopt plugins (for either avatars, background blurring, or both) so that when your users log in, they can go to their video settings and choose an avatar or choose to blur their background.

Avatar software syncs a 3D avatar with a real person (a live streamer) and overlays it on their face and body. The software uses video to capture the streamer's motions and moves the avatar along with the streamer's body and expressions.

Background blurring technology takes a live feed, detects the background, and applies a blur to it. Programmers can even control the amount of blur that a background has or set it as entirely opaque.
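To make the idea concrete, here is a toy sketch of the compositing step in pure Python. It assumes you already have a per-pixel mask marking where the person is (in practice a segmentation model inside the plugin would supply it) and uses a simple box blur on a grayscale frame; production plugins work on color video and run on optimized native or GPU code. The `radius` parameter plays the role of the adjustable blur amount.

```python
def box_blur(frame: list[list[float]], radius: int) -> list[list[float]]:
    """Blur a grayscale frame by averaging each pixel with its neighbours."""
    height, width = len(frame), len(frame[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            # Pixels inside the (2 * radius + 1) square, clipped at the frame edges.
            window = [
                frame[ny][nx]
                for ny in range(max(0, y - radius), min(height, y + radius + 1))
                for nx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            row.append(sum(window) / len(window))
        blurred.append(row)
    return blurred


def blur_background(frame, person_mask, radius):
    """Keep pixels where person_mask is True; use blurred pixels everywhere else."""
    blurred = box_blur(frame, radius)
    return [
        [frame[y][x] if person_mask[y][x] else blurred[y][x] for x in range(len(frame[0]))]
        for y in range(len(frame))
    ]


# A 3x3 frame with one bright "person" pixel in the centre (made-up data).
frame = [[0, 0, 0], [0, 90, 0], [0, 0, 0]]
person_mask = [[False, False, False], [False, True, False], [False, False, False]]
result = blur_background(frame, person_mask, radius=1)
```

A larger `radius` gives a stronger blur, and a radius of 0 leaves the frame unchanged.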

If you use plugins, make sure they are secure for your users. Assess what permissions each plugin is asking for and decline permissions if something seems suspicious or unsafe for your end users.

4. Moderate Content to Mitigate Bad Behavior

You can moderate specific content to identify and mitigate bad behavior, including harassment, hate speech, and bullying. Your app's reputation is at stake — if your app becomes known for allowing harassment, it can become costly when users decide to stop streaming on your app and use a different one.

Traditionally, developers would handle content moderation in chats by letting users block certain words or language and ban people who become offensive. Maintaining this kind of moderation can be time-consuming for developers. As bad behavior and harmful language evolve, your moderation has to evolve with them.
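A word blocklist of this kind can be sketched in a few lines. The example below is a simplified illustration with placeholder words and invented function names; it matches whole words only, so a blocked word inside a longer, harmless word is left alone.

```python
import re


def build_blocklist_pattern(blocked_words: set[str]) -> re.Pattern:
    """Compile a case-insensitive pattern that matches whole words only."""
    alternatives = "|".join(re.escape(word) for word in sorted(blocked_words))
    return re.compile(rf"\b(?:{alternatives})\b", re.IGNORECASE)


def mask_blocked_words(text: str, pattern: re.Pattern) -> str:
    """Replace each blocked word with asterisks of the same length."""
    return pattern.sub(lambda match: "*" * len(match.group()), text)


# Placeholder blocklist; in a real app each user or moderator would manage their own.
pattern = build_blocklist_pattern({"spam", "scam"})
masked = mask_blocked_words("This is a SCAM, not a scampi recipe.", pattern)
# masked == "This is a ****, not a scampi recipe."
```

The maintenance cost mentioned above shows up here as the blocklist itself: someone has to keep adding new words and spellings as language changes.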

Now, there are new AI tools that aim to automatically identify when bad behavior is occurring (on both video and chat). For example, Spectrum Labs, an AI content moderation tool, uses Natural Language Understanding (NLU) to identify potentially bad behavior and take action against the users committing the behavior.

One thing to be cautious of is that AI is imperfect. AI tools of this nature are relatively new, so they may flag content or behavior by mistake. For example, a tool might flag a user whose last name is "Gay" as having used a homophobic slur.

Each option has its advantages and disadvantages — AI may be more efficient but more expensive; traditional methods may be more cost-effective but too time-consuming. You have to identify which route works for your developers and the rest of the product team.

5. Implement Privacy Controls When Searching for Other Users

Users should be able to control how others can find them and which information is visible and searchable. Your users want to be able to engage with content and communities but also feel safe knowing that random or potentially harmful people can't contact them without their consent. Each person's boundaries will be different, so your features should be as flexible as possible.

To implement privacy controls that each user can adjust on their own, focus on a few functions.

Implement settings that let users choose which information to show and which to hide. For example, many users might want to show their birthday without the year but not their location, while other users will opt to share their location. Users should also be able to control whether or not their profile shows that they're online, since some users will prefer not to show their active status.

In addition to dictating what information is visible, allow users to define how they can be searched. For example, some folks are fine with being searchable by their name, phone number, and/or email address, whereas other users only want to be found by their name and nothing else. Others will not want to be found at all.

Visibility functions can also help improve privacy. Allow users to choose if they're only visible to "friends" or if they can be found by "friends of friends" or "any user."
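The settings described above could be modeled roughly as follows. This is a hypothetical sketch: the class names, field names, and the private-by-default values are assumptions made for illustration, not a prescribed schema.

```python
from dataclasses import dataclass, field
from enum import Enum


class Audience(Enum):
    FRIENDS = 1
    FRIENDS_OF_FRIENDS = 2
    ANY_USER = 3


@dataclass
class PrivacySettings:
    # Assumed defaults: the most private option until the user opts in to more.
    visible_to: Audience = Audience.FRIENDS
    searchable_by: set[str] = field(default_factory=lambda: {"name"})
    show_online_status: bool = False


def can_be_found(settings: PrivacySettings, search_field: str, relationship: Audience) -> bool:
    """Check whether a search on `search_field` may return this user.

    `relationship` is the closest tier the searcher belongs to, e.g.
    Audience.FRIENDS for a friend or Audience.ANY_USER for a stranger.
    """
    if search_field not in settings.searchable_by:
        return False
    # The searcher qualifies if their tier is at least as close as the allowed audience.
    return relationship.value <= settings.visible_to.value


settings = PrivacySettings(visible_to=Audience.FRIENDS_OF_FRIENDS)

can_be_found(settings, "name", Audience.FRIENDS)    # True
can_be_found(settings, "email", Audience.FRIENDS)   # False: email search not allowed
can_be_found(settings, "name", Audience.ANY_USER)   # False: strangers are excluded
```

A user who does not want to be found at all would simply have an empty `searchable_by` set.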

Safety Is Key for Successful Live Stream Chat Moderation

To keep your users happy, they have to feel confident that their information and privacy are protected. An application that users keep coming back to needs the right functions and features to ensure their safety. That means functions that let them control how other users find and interact with them, and features like third-party authentication that make your application convenient to use.

Stream's video calling API makes safety a top priority and features full end-to-end encryption. You can fully customize Stream's API to meet your audience's security standards. Plus, all video calls through Stream's API are SOC 2, HIPAA, and ISO 27001 compliant.

Try Stream for free to find out if it's the right API for your video application.