Large Language Models (LLMs) are becoming increasingly prevalent, and many developers are integrating LLM providers such as OpenAI, Anthropic, and Gemini into their Stream Chat applications across multiple SDKs. To help developers get started and accelerate their development with Stream Chat and AI, we've created step-by-step guides for each platform. To follow along, we recommend creating a free Stream account and choosing an LLM provider.

Our open-source examples include AI integrations with OpenAI and Anthropic out of the box. Developers can integrate any other LLM service by modifying the server-side agent, while still using all the UI features that Stream's Chat UI Kits offer.

How to Create an AI Assistant

Conversational AI is transforming how users interact with applications. With AI assistants, apps can now offer highly personalized agents that change how users interact with them, automate tasks, and solve problems. Use cases we've seen at Stream include apps trained on nutrition information that help users quickly learn details about the foods they are eating, and specialized education apps for different subjects.

Building AI agents can be tricky. Users today expect a high level of polish from their apps, and features such as streaming responses, thinking indicators, and table, code, and file components can be hard to implement consistently across different SDKs.

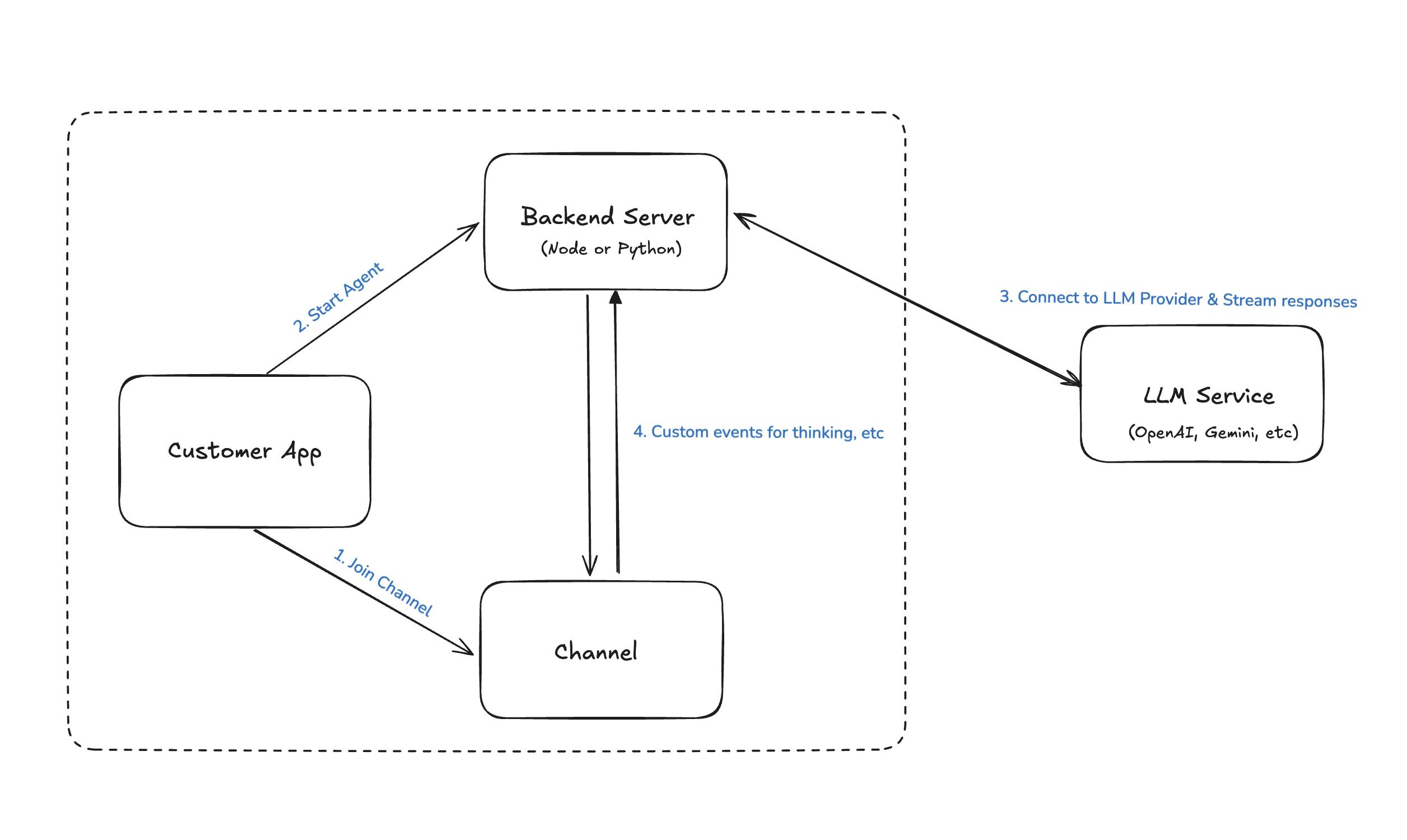

To simplify this process, we've built a generic approach for connecting our Chat API to external LLM providers. It uses a single backend server (NodeJS or Python) that manages the lifecycle of agents and handles the connection to external providers.

With this approach, developers can subclass our Anthropic Agent and OpenAI Agent interfaces to add support for whichever provider they prefer, and can be confident that the frontend of their application will scale and render these responses correctly.
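The agent pattern described above can be illustrated with a minimal, provider-agnostic sketch. It assumes one agent per chat channel and a provider that streams its reply in chunks. `ChatAgent`, `EchoAgent`, `AgentManager`, and `on_partial` are hypothetical names chosen for illustration; they are not the classes in Stream's repository. A real subclass would call its provider's streaming API inside `stream_reply` and push each partial text to the chat message.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict, Iterator, List

# Hypothetical names for illustration only: not Stream's SDK classes.


class ChatAgent(ABC):
    """Hypothetical base class: one agent instance per chat channel."""

    def __init__(self, channel_id: str) -> None:
        self.channel_id = channel_id
        self.active = False

    def init(self) -> None:
        # A real agent would create its provider client and join the channel here.
        self.active = True

    def dispose(self) -> None:
        # A real agent would close provider connections and leave the channel here.
        self.active = False

    @abstractmethod
    def stream_reply(self, prompt: str) -> Iterator[str]:
        """Yield the reply in chunks, as an LLM streaming API would."""


class EchoAgent(ChatAgent):
    """Stand-in provider: 'streams' the prompt back word by word."""

    def stream_reply(self, prompt: str) -> Iterator[str]:
        for word in prompt.split():
            yield word + " "


class AgentManager:
    """Starts agents on demand and stops them, keyed by channel id."""

    def __init__(self, factory: Callable[[str], ChatAgent]) -> None:
        self._factory = factory
        self._agents: Dict[str, ChatAgent] = {}

    def start(self, channel_id: str) -> ChatAgent:
        # Reuse the channel's existing agent, or create and initialize one.
        agent = self._agents.get(channel_id)
        if agent is None:
            agent = self._factory(channel_id)
            agent.init()
            self._agents[channel_id] = agent
        return agent

    def stop(self, channel_id: str) -> None:
        agent = self._agents.pop(channel_id, None)
        if agent is not None:
            agent.dispose()

    def handle(self, channel_id: str, prompt: str,
               on_partial: Callable[[str], None]) -> str:
        # Accumulate chunks and report the growing text after each one,
        # so a frontend could render the response as it arrives.
        agent = self.start(channel_id)
        text = ""
        for chunk in agent.stream_reply(prompt):
            text += chunk
            on_partial(text)
        return text.strip()


# Usage: swap EchoAgent for a real provider subclass without touching the manager.
manager = AgentManager(EchoAgent)
updates: List[str] = []
reply = manager.handle("support-1", "hello from Stream", updates.append)
print(reply)         # hello from Stream
print(len(updates))  # 3
manager.stop("support-1")
```

Keeping provider details behind a single abstract method is what lets the rest of the stack stay unchanged when the provider changes: the manager and the UI only ever see a growing string of text.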

Choose Your Platform

To get started building with AI and Stream, pick the framework best suited to your application and follow the step-by-step instructions in its guide:

- Flutter: Create a seamless AI chat assistant for cross-platform apps using Stream's Flutter SDK.

- React Native: Step into the world of AI-enhanced chat apps with Stream's React Native SDK and our tutorial.

- React: Develop chat assistants for web applications with Stream's React chat SDK.

- Android: Build powerful and responsive assistants for Android applications with Stream's Android chat SDK.

- iOS: Learn how to create an assistant for iOS apps with Stream's iOS chat SDK.

- NodeJS: Set up the server-side agent that powers your AI assistant with Stream's NodeJS chat SDK.

To learn more about the backend setup, check out the README in our GitHub repository. It covers everything from the project architecture to ways the integration can be extended to support other LLM providers.

Start Building Today

Ready to take it for a spin? Choose your platform and give building an assistant a shot! Our team also created a repo with examples for each SDK, including a sample backend implementation. Check it out, and leave us a ⭐ if you find it helpful.

Our team is always active on social media, so once you've finished your app, feel free to tag us on X or LinkedIn and let us know how it went!